Abstract

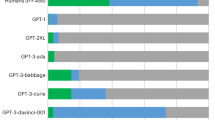

The recent advent of large language models has reinvigorated debate over whether human cognitive capacities might emerge in such generic models given sufficient training data. Of particular interest is the ability of these models to reason about novel problems zero-shot, without any direct training. In human cognition, this capacity is closely tied to an ability to reason by analogy. Here we performed a direct comparison between human reasoners and a large language model (the text-davinci-003 variant of Generative Pre-trained Transformer (GPT)-3) on a range of analogical tasks, including a non-visual matrix reasoning task based on the rule structure of Raven’s Standard Progressive Matrices. We found that GPT-3 displayed a surprisingly strong capacity for abstract pattern induction, matching or even surpassing human capabilities in most settings; preliminary tests of GPT-4 indicated even better performance. Our results indicate that large language models such as GPT-3 have acquired an emergent ability to find zero-shot solutions to a broad range of analogy problems.
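To make the idea of a "non-visual matrix reasoning task" concrete, here is a minimal, hypothetical Python sketch. It builds a Raven-style 3 × 3 matrix of digits governed by a single progression rule, renders it as text with the final cell left blank, and completes it with a toy rule-induction solver. The function names, the bracketed text layout and the restriction to one within-row progression rule are illustrative assumptions. They are not the authors' Digit Matrices generator or prompt format; those materials are in the repository listed under Data availability.

```python
from typing import List, Optional

# A 3x3 grid of digits; None marks the missing (to-be-inferred) cell.
Grid = List[List[Optional[int]]]


def make_progression_matrix(start: int, row_step: int, col_step: int) -> Grid:
    """Hypothetical generator: cell (r, c) = start + r*row_step + c*col_step.

    The bottom-right cell is blanked out, as in a Raven-style problem.
    """
    grid: Grid = [[start + r * row_step + c * col_step for c in range(3)]
                  for r in range(3)]
    grid[2][2] = None
    return grid


def render(grid: Grid) -> str:
    """Render the matrix as plain text, one bracketed cell per entry."""
    return "\n".join(
        " ".join("[ ]" if v is None else f"[{v}]" for v in row) for row in grid
    )


def solve_row_progression(grid: Grid) -> int:
    """Induce a constant within-row step from the two complete rows,
    check it against the third row, then fill in the missing cell."""
    steps = {grid[r][c + 1] - grid[r][c] for r in range(2) for c in range(2)}
    if len(steps) != 1:
        raise ValueError("complete rows do not share a single constant step")
    (step,) = steps
    if grid[2][1] - grid[2][0] != step:
        raise ValueError("third row is inconsistent with the induced step")
    return grid[2][1] + step


problem = make_progression_matrix(start=1, row_step=3, col_step=1)
print(render(problem))
print("answer:", solve_row_progression(problem))
```

Raven-style problems can combine several rules at once; this sketch covers only the simplest case of one within-row progression, so it shows the format of the task rather than its difficulty.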

Data availability

Data for all human behavioural experiments, along with the Digit Matrices, letter string analogy and UCLA VAT problem sets, can be downloaded from https://github.com/taylorwwebb/emergent_analogies_LLM. The four-term verbal analogy problem sets from Sternberg and Nigro (ref. 17) and Jones et al. (ref. 20), and the story analogy materials from Gentner et al. (ref. 23), can be downloaded from http://cvl.psych.ucla.edu/resources/AnalogyInventory.zip. Information about the SAT four-term verbal analogy problem set from Turney et al. (ref. 18) can be found at https://aclweb.org/aclwiki/SAT_Analogy_Questions_(State_of_the_art).

Code availability

Code for all simulations can be downloaded from https://github.com/taylorwwebb/emergent_analogies_LLM.

References

1. Holyoak, K. J. in Oxford Handbook of Thinking and Reasoning (eds Holyoak, K. J. & Morrison, R. G.) 234–259 (Oxford Univ. Press, 2012).

2. Bassok, M. & Novick, L. R. in Oxford Handbook of Thinking and Reasoning (eds Holyoak, K. J. & Morrison, R. G.) 413–432 (Oxford Univ. Press, 2012).

3. Dunbar, K. N. & Klahr, D. in Oxford Handbook of Thinking and Reasoning (eds Holyoak, K. J. & Morrison, R. G.) 701–718 (Oxford Univ. Press, 2012).

4. Cattell, R. B. Abilities: Their Structure, Growth, and Action (Houghton Mifflin, 1971).

5. Snow, R. E., Kyllonen, P. C. & Marshalek, B. The topography of ability and learning correlations. Adv. Psychol. Hum. Intell. 2, 103 (1984).

6. Mitchell, M. Abstraction and analogy-making in artificial intelligence. Ann. N. Y. Acad. Sci. 1505, 79–101 (2021).

7. Barrett, D., Hill, F., Santoro, A., Morcos, A. & Lillicrap, T. in International Conference on Machine Learning (eds Dy, J. & Krause, A.) 511–520 (PMLR, 2018).

8. Zhang, C., Gao, F., Jia, B., Zhu, Y. & Zhu, S.-C. in Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (eds Gupta, A. et al.) 5317–5327 (IEEE, 2019).

9. Hill, F., Santoro, A., Barrett, D. G. T., Morcos, A. S. & Lillicrap, T. P. Learning to make analogies by contrasting abstract relational structure. in 7th International Conference on Learning Representations, ICLR https://openreview.net/forum?id=SylLYsCcFm (2019).

10. Wu, Y., Dong, H., Grosse, R. & Ba, J. The scattering compositional learner: discovering objects, attributes, relationships in analogical reasoning. Preprint at arXiv https://doi.org/10.48550/arXiv.2007.04212 (2020).

11. Hersche, M., Zeqiri, M., Benini, L., Sebastian, A. & Rahimi, A. A neuro-vector-symbolic architecture for solving Raven’s progressive matrices. Nat. Mach. Intell. 5, 363–375 (2023).

12. Subhra Mondal, S., Webb, T. W. & Cohen, J. D. Learning to reason over visual objects. in 11th International Conference on Learning Representations, ICLR https://openreview.net/forum?id=uR6x8Be7o_M (2023).

13. Brown, T. et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 33, 1877–1901 (2020).

14. Mahowald, K. et al. Dissociating language and thought in large language models: a cognitive perspective. Preprint at arXiv https://doi.org/10.48550/arXiv.2301.06627 (2023).

15. Raven, J. C. Progressive Matrices: A Perceptual Test of Intelligence, Individual Form (Lewis, 1938).

16. Hofstadter, D. R. & Mitchell, M. in Advances in Connectionist and Neural Computation Theory Vol. 2 (eds Holyoak, K. J. & Barnden, J. A.) 31–112 (Ablex, 1994).

17. Sternberg, R. J. & Nigro, G. Developmental patterns in the solution of verbal analogies. Child Dev. 51, 27–38 (1980).

18. Turney, P. D., Littman, M. L., Bigham, J. & Shnayder, V. in Proc. International Conference on Recent Advances in Natural Language Processing (eds Angelova, G. et al.) 482–489 (RANLP, 2003).

19. Lu, H., Wu, Y. N. & Holyoak, K. J. Emergence of analogy from relation learning. Proc. Natl Acad. Sci. USA 116, 4176–4181 (2019).

20. Jones, L. L., Kmiecik, M. J., Irwin, J. L. & Morrison, R. G. Differential effects of semantic distance, distractor salience, and relations in verbal analogy. Psychon. Bull. Rev. 29, 1480–1491 (2022).

21. Gick, M. L. & Holyoak, K. J. Analogical problem solving. Cogn. Psychol. 12, 306–355 (1980).

22. Holyoak, K. J., Junn, E. N. & Billman, D. O. Development of analogical problem-solving skill. Child Dev. 55, 2042–2055 (1984).

23. Gentner, D., Rattermann, M. J. & Forbus, K. D. The roles of similarity in transfer: separating retrievability from inferential soundness. Cogn. Psychol. 25, 524–575 (1993).

24. Dasgupta, I. et al. Language models show human-like content effects on reasoning. Preprint at arXiv https://doi.org/10.48550/arXiv.2207.07051 (2022).

25. Srivastava, A. et al. Beyond the imitation game: quantifying and extrapolating the capabilities of language models. Transactions on Machine Learning Research https://openreview.net/forum?id=uyTL5Bvosj (2023).

26. Wei, J. et al. Emergent abilities of large language models. Transactions on Machine Learning Research https://openreview.net/forum?id=yzkSU5zdwD (2022).

27. Chan, S. C. et al. Data distributional properties drive emergent in-context learning in transformers. Adv. Neural Inf. Process. Syst. 35, 18878–18891 (2022).

28. Binz, M. & Schulz, E. Using cognitive psychology to understand GPT-3. Proc. Natl Acad. Sci. USA 120, e2218523120 (2023).

29. Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 30, 5998–6008 (2017).

30. Chen, M. et al. Evaluating large language models trained on code. Preprint at arXiv https://doi.org/10.48550/arXiv.2107.03374 (2021).

31. Ouyang, L. et al. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 35, 27730–27744 (2022).

32. Matzen, L. E. et al. Recreating Raven’s: software for systematically generating large numbers of Raven-like matrix problems with normed properties. Behav. Res. Methods 42, 525–541 (2010).

33. Matlen, B. J., Gentner, D. & Franconeri, S. L. Spatial alignment facilitates visual comparison. J. Exp. Psychol. Hum. Percept. Perform. 46, 443 (2020).

34. Kroger, J. K., Holyoak, K. J. & Hummel, J. E. Varieties of sameness: the impact of relational complexity on perceptual comparisons. Cogn. Sci. 28, 335–358 (2004).

35. Halford, G. S., Wilson, W. H. & Phillips, S. Processing capacity defined by relational complexity: implications for comparative, developmental, and cognitive psychology. Behav. Brain Sci. 21, 803–831 (1998).

36. Chalmers, D. J., French, R. M. & Hofstadter, D. R. High-level perception, representation, and analogy: a critique of artificial intelligence methodology. J. Exp. Theor. Artif. Intell. 4, 185–211 (1992).

37. Hofstadter, D. R. Fluid Concepts and Creative Analogies: Computer Models of the Fundamental Mechanisms of Thought (Basic Books, 1995).

38. Lovett, A. & Forbus, K. Modeling visual problem solving as analogical reasoning. Psychol. Rev. 124, 60 (2017).

39. Mitchell, M. Analogy-Making as Perception: A Computer Model (MIT Press, 1993).

40. Ichien, N., Lu, H. & Holyoak, K. J. Verbal analogy problem sets: an inventory of testing materials. Behav. Res. Methods 52, 1803–1816 (2020).

41. Wason, P. C. Reasoning about a rule. Q. J. Exp. Psychol. 20, 273–281 (1968).

42. Gentner, D. Structure-mapping: a theoretical framework for analogy. Cogn. Sci. 7, 155–170 (1983).

43. OpenAI. GPT-4 technical report. Preprint at arXiv https://doi.org/10.48550/arXiv.2303.08774 (2023).

44. Duncker, K. On problem-solving. Psychol. Monogr. 58, 1–113 (1945).

45. Holyoak, K. J. & Koh, K. Surface and structural similarity in analogical transfer. Mem. Cogn. 15, 332–340 (1987).

46. McClelland, J. L., Hill, F., Rudolph, M., Baldridge, J. & Schütze, H. Placing language in an integrated understanding system: next steps toward human-level performance in neural language models. Proc. Natl Acad. Sci. USA 117, 25966–25974 (2020).

47. Marcus, G. F. The Algebraic Mind: Integrating Connectionism and Cognitive Science (MIT Press, 2001).

48. Lake, B. M., Ullman, T. D., Tenenbaum, J. B. & Gershman, S. J. Building machines that learn and think like people. Behav. Brain Sci. 40, e253 (2017).

49. Webb, T. W. et al. in International Conference on Machine Learning (eds Daumé, H. III & Singh, A.) 10136–10146 (PMLR, 2020).

50. Falkenhainer, B., Forbus, K. D. & Gentner, D. The structure-mapping engine: algorithm and examples. Artif. Intell. 41, 1–63 (1989).

51. Lu, H., Ichien, N. & Holyoak, K. J. Probabilistic analogical mapping with semantic relation networks. Psychol. Rev. 129, 1078–1103 (2022).

52. Webb, T. W., Fu, S., Bihl, T., Holyoak, K. J. & Lu, H. Zero-shot visual reasoning through probabilistic analogical mapping. Preprint at arXiv https://doi.org/10.48550/arXiv.2209.15087 (2022).

53. Smolensky, P. Tensor product variable binding and the representation of symbolic structures in connectionist systems. Artif. Intell. 46, 159–216 (1990).

54. Holyoak, K. J. & Hummel, J. E. in Cognitive Dynamics: Conceptual Change in Humans and Machines (eds Dietrich, E. & Markman, A. B.) 229–263 (Lawrence Erlbaum Associates, 2000).

55. Kriete, T., Noelle, D. C., Cohen, J. D. & O’Reilly, R. C. Indirection and symbol-like processing in the prefrontal cortex and basal ganglia. Proc. Natl Acad. Sci. USA 110, 16390–16395 (2013).

56. Webb, T. W., Sinha, I. & Cohen, J. D. Emergent symbols through binding in external memory. in 9th International Conference on Learning Representations, ICLR https://openreview.net/forum?id=LSFCEb3GYU7 (2021).

57. Greff, K., van Steenkiste, S. & Schmidhuber, J. On the binding problem in artificial neural networks. Preprint at arXiv https://doi.org/10.48550/arXiv.2012.05208 (2020).

58. Griffiths, T. L. Understanding human intelligence through human limitations. Trends Cogn. Sci. 24, 873–883 (2020).

59. Newell, A., Shaw, J. C. & Simon, H. A. Elements of a theory of human problem solving. Psychol. Rev. 65, 151 (1958).

60. Carpenter, P. A., Just, M. A. & Shell, P. What one intelligence test measures: a theoretical account of the processing in the Raven progressive matrices test. Psychol. Rev. 97, 404 (1990).

61. Penn, D. C., Holyoak, K. J. & Povinelli, D. J. Darwin’s mistake: explaining the discontinuity between human and nonhuman minds. Behav. Brain Sci. 31, 109–130 (2008).

62. Harris, C. R. et al. Array programming with NumPy. Nature 585, 357–362 (2020).

63. Virtanen, P. et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

64. Seabold, S. & Perktold, J. in 9th Python in Science Conference (eds van der Walt, S. & Millman, J.) 92–96 (SciPy, 2010).

65. Hunter, J. D. Matplotlib: a 2D graphics environment. Comput. Sci. Eng. 9, 90–95 (2007).

66. The Pandas Development Team. pandas-dev/pandas: Pandas. Zenodo https://doi.org/10.5281/zenodo.3509134 (2020).

67. R Core Team. R: A Language and Environment for Statistical Computing (R Foundation for Statistical Computing, 2021).

68. De Leeuw, J. R. jsPsych: a JavaScript library for creating behavioral experiments in a web browser. Behav. Res. Methods 47, 1–12 (2015).

69. Kojima, T., Gu, S. S., Reid, M., Matsuo, Y. & Iwasawa, Y. Large language models are zero-shot reasoners. Adv. Neural Inf. Process. Syst. 35, 22199–22213 (2022).

70. Turney, P. D. & Littman, M. L. Corpus-based learning of analogies and semantic relations. Mach. Learn. 60, 251–278 (2005).

Acknowledgements

We thank B. Snefjella and P. Turney for helpful feedback and discussions. Preparation of this paper was supported by NSF grant IIS-1956441 and AFOSR MURI grant FA9550-22-1-0380 to H.L. The funders had no role in study design, data collection and analysis, decision to publish or preparation of the manuscript.

Author information

Contributions

T.W., K.J.H. and H.L. conceived the project and planned experiments. T.W. implemented experiments and analysed results. T.W., K.J.H. and H.L. drafted the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Nature Human Behaviour thanks Abbas Rahimi and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Webb, T., Holyoak, K.J. & Lu, H. Emergent analogical reasoning in large language models. Nat Hum Behav 7, 1526–1541 (2023). https://doi.org/10.1038/s41562-023-01659-w

DOI: https://doi.org/10.1038/s41562-023-01659-w
